Your approach with several camlocate nodes should work, but it can be done with only one camlocate.

You don't need to use the snapshot_position_offset parameter because your work plane is always at the same height. The snapshot_position_offset parameter is used to offset the Z position of the work plane.

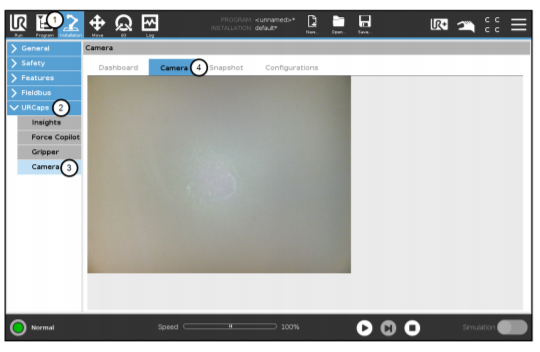

What you need is to use the ignore_snapshot_position option of the camlocate node and remove the option to automatically move the robot to the snapshot position. This way the robot can enter the camlocate node from any position. The robot takes a picture from the position it had before entering the camlocate node and detects the objects on the work plane.

You can put the camlocate node in a loop which brings the robot to each position of the work plane and then enters the camlocate node.

Let's say that you divide your work plane into 6 areas. The program would look something like this:

    i=0
    While i<6
        MoveJ
            Switch Case (i)
                case 0
                    Waypoint_0
                ...
                ...
                case 5
                    Waypoint_5
        Camlocate
            ...Picking instruction...
        i=i+1
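
To make the logic of this loop explicit, here is a minimal Python sketch of the same control flow. It is not URScript and does not use any Robotiq or Universal Robots API: `scan_areas`, `move_to`, `cam_locate` and `pick` are hypothetical placeholders standing in for the MoveJ/Switch, Camlocate and picking nodes. It also assumes that the instructions inside the camlocate node only run when an object is detected.

```python
from typing import Callable, Optional, Sequence


def scan_areas(
    waypoints: Sequence[str],
    move_to: Callable[[str], None],
    cam_locate: Callable[[], Optional[str]],
    pick: Callable[[str], None],
) -> int:
    """Visit each area once, take a picture there, and pick what is found.

    All three callables are hypothetical stand-ins for program nodes.
    Returns the number of objects picked.
    """
    picked = 0
    i = 0
    while i < len(waypoints):
        # MoveJ + Switch Case (i): go to the viewing position of area i.
        move_to(waypoints[i])
        # Camlocate with the snapshot position ignored: the picture is
        # taken from wherever the robot currently is.
        found = cam_locate()
        # Assumption: picking instructions run only when something is detected.
        if found is not None:
            pick(found)
            picked += 1
        i = i + 1
    return picked


if __name__ == "__main__":
    # Simulated table with parts in two of the six areas.
    parts = {"Waypoint_1": "part_A", "Waypoint_4": "part_B"}
    visited = []

    count = scan_areas(
        [f"Waypoint_{k}" for k in range(6)],
        move_to=visited.append,
        cam_locate=lambda: parts.pop(visited[-1], None),
        pick=lambda obj: print("picked", obj, "at", visited[-1]),
    )
    print("objects picked:", count)
```

When nothing is detected in an area, the sketch simply skips the picking step and moves on to the next waypoint, which is the behaviour the loop above relies on.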

As the calibration board is smaller than your complete work plane, you may have some positioning error on the parts of the work plane that are far from where the calibration board was placed (the calibration board may not be exactly parallel to the work plane, and the positioning of the calibration board also has a small error). This may result in detection issues or unexpected variation of the Z picking position.
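
To give a feeling for the size of this effect, here is a small Python calculation. It assumes a simple geometric model that is not taken from the camera documentation: the calibrated plane is tilted by a small angle relative to the real table, so the Z error grows with the distance from the calibration board as distance × tan(tilt). The function name `z_error_mm` and the 0.2° tilt are illustrative assumptions only.

```python
import math


def z_error_mm(distance_mm: float, tilt_deg: float) -> float:
    """Z deviation at a given distance from the calibration board.

    Assumes the calibrated plane is tilted by tilt_deg relative to the
    real table (a simplified model used for illustration only).
    """
    return distance_mm * math.tan(math.radians(tilt_deg))


if __name__ == "__main__":
    # 0.2 degrees is an assumed, illustrative tilt, not a measured value.
    for distance in (100, 500, 1000):
        error = z_error_mm(distance, 0.2)
        print(f"{distance} mm from the board: about {error:.2f} mm Z error")
```

Under this assumption, a tilt of only 0.2° already gives roughly 1.7 mm of Z error at 500 mm from the board, which is why picking height can drift at the far ends of a large table.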

bcastets

bcastets

I added the sample program on the DoF post:

https://dof.robotiq.com/discussion/1430/camera-detect-object-on-various-plane-and-from-various-camera-location

Regarding the error, on which node do you get it? Could you share your program?

I suppose you have a While command whose condition is not defined.

Hi!

I'm having problems building a program that can find parts on a big table with Camera Locate and load them into a CNC machine. So far I have managed to use only one snapshot point and one small corner of the table where the metal pieces are placed. I tried to create more feature points and snapshot points, but the program was not working as intended and was a mess, since I had 10 different spots to locate parts.

I saw this post (https://dof.robotiq.com/discussion/1430/camera-detect-object-on-various-plane-and-from-various-camera-location) about the snapshot_position_offset command and wondered if this is the easiest way to solve my problem. The program should pick all pieces from one snapshot point and then move to the next location until the whole table is empty. If this command is the solution to my problem, should I add an If statement to get the robot to move to the next place when nothing is found at the first place? Or is there some other, easier way to do this whole thing?

Grateful for all answers!

JMH